A Map for AI Town Planners, 2024-29

AI has already speedrun through pioneer/settler phases to the town-planner phase

This essay is part of the Mediocre Computing series.

As many observers have noted, AI appears to be speedrunning the technological evolution curve. The Altman-OpenAI exit/return saga unfolding in days, compared to the Jobs-Apple exit/return saga unfolding over years, was a particularly dramatic motif for this observation. It is not entirely true, however. The ImageNet moment, which arguably marks the start of this historical epoch, was in 2012. That’s 12 years ago. I think what gives us this speedrunning sensation is that the curve was slower than normal in the first 10 years, and has been faster than normal in the last two years.

Another way (a kairos over chronos way) to think about this evolutionary history is in terms of Simon Wardley’s pioneers/settlers/town-planners 3-phase model. In terms of this model, I think we have already arrived at the transition to the town-planning phase. My own Labatutian-Lovecraftian-Ballardian 3-phase model of evolution in intellectual milieus parallels Wardley’s model. Intellectual currents and zeitgeist conditions tend to track the state of technological play quite closely.

Pioneer phases feature Labatutian milieus, full of mind-destroying glimpses of the never-before-experienced frontier (remember the creepy image era of Deep Dream and GANs?). Settler phases feature Lovecraftian milieus where mythic narratives and archetypes of horror begin to cohere properly (shoggoths! stochastic parrots!). Finally, Town Planner phases feature Ballardian milieus, full of intellectual built environments with banal, familiar surfaces, and horrors tamed and/or banished underground to the sewers.

Getting to town planning and Ballardian milieus this quickly is unusual. Crypto, for example, despite being 4 years older (the Satoshi Moment was 2008), seems to have been stuck in a late Settler/Lovecraftian phase for the last few years. It is struggling to switch gears to town planning and Ballardian banality.

I think the reason we’re here this quickly has little to do with the nature of the technology, and a lot to do with what it feeds on. Modern AI is heavily reliant on large-scale training data availability and intensive capital investment in GPUs. This means it has encountered the world of capital markets, older companies, legacy media, and the regulatory environment around copyright, much earlier than it might have otherwise. An appropriate comparison is to digital music and streaming video. Both of these, like AI, and unlike most technologies, got to town-planning phases in less than a decade because they needed access to unencumbered legacy data to scale past a point.

I like making maps of stuff, and in reviewing my own writing on AI over the last few years for an upcoming corporate punditry gig, it struck me that town planning would make a good metaphor for a map of the state of play in 2024.

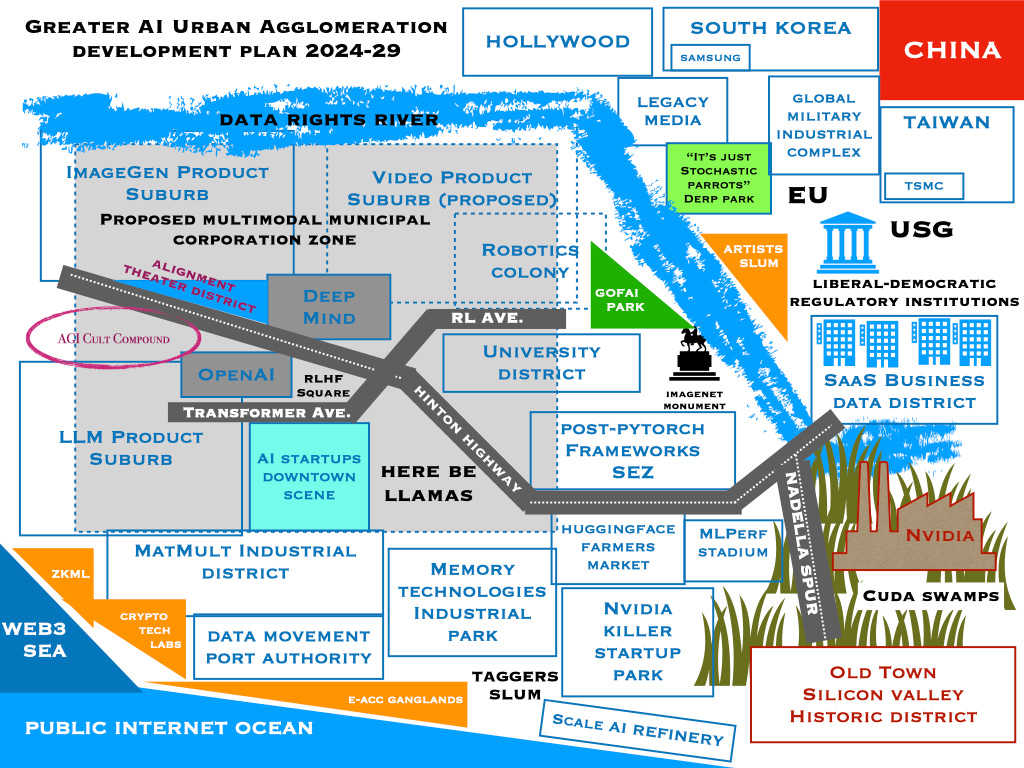

So without further ado, here is my AI town-planner’s map, 2024-29.

It’s rough, and I might improve and polish it (with a Ballardian aesthetic!) based on feedback over the next few months. The included elements are meant to be indicative of the urban geography rather than serve as an exhaustive inventory of everything that could and probably should be plotted on it.

For example, Midjourney and Stability.ai would be in the startup downtown. The University District would feature frontier subfields and weird neuroscience side quest zones. I have not tried to squeeze in this level of detail. I have tried to size the major urban zones/districts in proportion to how important I think they are in shaping the history of this town-planning phase. I have tried to position them in the allegorically appropriate places, with the right metaphoric connotations.

So for example, every AI tech Morlock I talk to tells me that data movement at rack-scale is a critical and unsolved systems engineering problem that is rate-limiting progress (AI on one GPU is basically solved. AI on a 10,000 GPU cluster is currently painstaking and fragile artisan rigging work). So the size of the Data Movement Port Authority block represents my borrowed sense of the importance of that thread of activity. Someone will hopefully invent the AI equivalent of reinforced concrete and turn that whole block from an artisan-bureaucrat colony to a highly automated engineering yard.

A few notes.

Dotted-line areas are spaces where the thinking is kinda done, and we’re in an execution/build-out phase. I think it’s clear that robotics and video, for example, are going to be the next major dominoes to fall, and that it’s going to be approximately as mind-blowing as text and images have been so far. And it is already clear that multimodal AI is going to work well enough to be at least useful, and almost certainly very disruptive economically. This stuff is moving from basic research to development and commercialization. We’ve already priced in the expected surprise levels. This is known-unknown stuff on the roadmap.

Orange bits are where I expect to see more interesting unknown-unknown foundational thinking and experimentation to emerge in the next few years. As far as basic science stuff goes, ZKML (zero-knowledge machine learning) and cryptographic technologies in general are going to irrupt into the landscape in unexpected and consequential ways I think. They’ll form a data protection element to complement the data movement element. Regulated data movement (including in the form of weights with varying degrees of exfiltration vulnerability) is the future.

Cultural orange bits. The e/acc crowd, which I’ve plotted as a sort of rowdy waterfront district, is for the moment mostly annoying and noisy, but they have the right attitude to produce interesting thinking that goes beyond memes and slogans. The artists slum too, though currently mostly in panic mode and complaining about “theft” (like they haven’t been stealing from each other forever) and repeating the tediously simplistic stochastic-parrot derping from the progressive commentariat next door, is I think going to ultimately find the new medium simply too seductive to resist, and start doing more interesting things with it.

If AI is in a town-planning phase, then Sam Altman would definitely be its Robert Moses (though Jensen Huang, partly by accident, partly by prescience, owns much of the prime real estate). This thought got me thinking — who is going to be the Jane Jacobs of AI? It very well might be Justine Tunney, who released llamafile via Mozilla. More loosely, the Jacobsian milieu of AI is definitely the world taking shape around the leaked Llama weights from Meta, and the general culture of jailbroken, unbridled experimentation taking root there. If you want your local, organic, community-driven AI, that’s where you’d go look. I’ve located it next to the Huggingface farmer’s market, and the two together form the resistance movement to the OpenAI-Microsoft style development forces.

The only allegorical natural element in the map, besides the river, is the part labeled Cuda Swamps (Cuda is Nvidia’s low-level software, maintained and continuously refined by an army of their engineers, that enables everybody else to use their GPUs). I’m particularly pleased with this allegorical mapping. Should it be drained and cleared? Or is it an essential wetland without which the whole ecosystem doesn’t work at all? Nvidia would definitely like us all to buy the latter theory, but almost the entire industry is fervently hoping it can be drained and cleared and the low-level enclosure it represents turned into an equivalent public piece of the stack. At the very least, everyone hopes the boundaries of the Cuda swamp can be pushed back (see: OpenAI’s Triton for example). We’ll see how all that goes.

Thoughts on things that are missing and should be in there? Ideas for refactoring the layout in more insightful ways?

My own writing in the last year was definitely in Settler/Lovecraftian mode. I was focused on identifying the fundamental contours of the idea space, making sense of the emerging mythos around the technology, and developing my own taste and judgments. I feel like I’m almost done with scratching any Settler itches, and have a good sense of both the lay of the land and the social geography of the various milieus that have settled on it and will be navigating the transition to the town planning phase in different ways. I’m personally ready to move on to the Town Planning phase, though I don’t quite know what that means for my writing. I’ve had enough of shoggoth memes and trolling the AGI alignment cult. It’s time for more serious and interesting things.

If you’re joining late, here’s a shortlist of my lighthouse posts that mark the evolution of my own thinking:

A Camera, Not an Engine: This has been the most popular and most recent post, and also features probably my most accessible arguments and most current framing. If you read only one thing in this whole series, this should probably be it.

Superhistory, Not Superintelligence: This was my first serious stab at writing about AI, back in May 2021. The core argument is that we should think of deep learning models as a kind of artificial time rather than artificial intelligence. I’d modify the argument slightly now and do a sort of time-as-memory argument, but the original post is not too far off from my current position.

Text is All You Need: This proposes a model of artificial personhood sufficient for humans to relate to, and argues that text is all you need to produce it, which has big practical and social implications.

Boundary Intelligence: This post, a repackaging of a 2017 thread about human intelligence, represents the current outer limit of my own thinking. The argument is simple to follow, but its implications are very murky indeed. Basically, the takeaway is that the power of an AI is entirely a function of its training data at the limit, and is independent of the sophistication of the processing past a point. This means managing the data boundary is the most important problem in leveling up to the next generation of AI. In terms of the concepts introduced here, it’s time to shift focus from Artificial Interior Intelligence (AII) to Artificial Boundary Intelligence (ABI).

Oozy Intelligence in Slow Time: This post, starting with a riff on Matt Webb’s “too cheap to meter” view of AI, builds out a view of all technology, but especially AI, as getting more “oozy.” This is I think the load-carrying prediction that a lot of my own expectations are based on, such as the idea that intelligence on the edge, in smaller form factors, will be much more important than people realize.

Can Robots Dream of Phantom Limbs?: I think I’m in the minority (probably due to my control theory/aerospace/robotics background) in believing that embodiment is going to be the essential driver and shaper of the future evolution of AI. Most AI people don’t think embodiment matters, either practically or philosophically. At the time I wrote this, I didn’t have a fully worked out idea of why I disagreed, but having connected the dots properly to boundary intelligence, I am beginning to work it out properly. Expect a post along this vector soon (high-ABI robots?).

The Dawn of Mediocre Computing: Finally, much of my writing on AI is a thread within a broader framing I’ve been developing of the future of computing as a whole that I call Mediocre Computing. This frame includes, besides AI, 3 other key threads: crypto, robotics, and metaverse technologies. I think this whole is much more than the sum of the parts, and it may help to get a sense of this larger frame if some of my arguments about AI specifically sound unmotivated to you.

I’ve unpaywalled all these posts. All are part of my Mediocre Computing series, which has a lot more posts, but if you’re only interested in my writing on AI, this subset should get you caught up enough to follow my writing in 2024.

And I look forward to ideas for improving the map.