Courage in Computing

Three styles of modern computing

This essay is part of the Mediocre Computing series

One of my favorite anecdotes (possibly apocryphal) from computing history concerns the Ada programming language. The language apparently had (has?) no clear semantics for terminating and exiting a program because it was designed to be used for embedded control of missiles. The program would end when the missile blew up. A delightful illustration of parsimonious YAGNI (you ain’t gonna need it) design thinking. I’m not sure how true this was or still is (versions of Ada are still around), but this sort of “crash-only” programming language design, lacking clean default termination semantics, is an example of what I think of as “courage in computing.” Courageous computing is computing designed to be aware of, and pragmatically responsive to, the inescapable constraints and risks of the real world, in all its messy glory.

This is not courage in the programmer or human operator of a program, but courage intrinsic to the design of the computing system itself. Any sort of engineering design that allows for, or expects, dangerous things to happen has courage built into the medium of the message in the form of systemic risk-management predispositions. A missile has that built into not just the design of the guidance computer and program, but obviously into the physical body itself: it is a bomb mounted on a tube that is itself full of explosive propellant. Or to take a simpler example, a knife without a live edge can’t cut you, but is also useless (a knife with a live but dull edge is the worst of both worlds — bad at cutting things you want cut, likelier to cut things you don’t want cut).

A more familiar computing example is C, with its laissez-faire attitude to memory management. You can do whatever you want with pointers and memory, including very dangerous things. This design was partly driven by the state of computing when C was invented. Memory limitations meant the only kind of efficient programming possible was a dangerous kind, requiring programmers to do active memory management. Kinda like how before the invention of dynamite, much more temperamental explosives had to be used for construction.
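To make the laissez-faire point concrete, here is a minimal sketch in C. The helper `copy_prefix` is a hypothetical name invented for illustration, not something from any real codebase. It shows how the bounds check, the allocation-failure check, and the eventual `free()` are all left to the programmer.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (an assumption for illustration): returns a heap
   copy of the first n bytes of src, NUL-terminated. The caller owns the
   result and must free() it; nothing in the language enforces that. */
char *copy_prefix(const char *src, size_t n)
{
    assert(src != NULL);

    size_t len = strlen(src);
    if (n > len) {
        n = len;        /* clamp: copying past the end of src would be
                           undefined behavior, and C would not stop us */
    }

    char *out = malloc(n + 1);
    if (out == NULL) {
        return NULL;    /* allocation can fail; the caller has to check */
    }

    memcpy(out, src, n);
    out[n] = '\0';
    return out;
}
```

Delete the clamp, skip the `NULL` check, or forget the `free()` at the call site, and the program will typically still compile without complaint. The risk management lives in the programmer's habits, not in the language.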

As memory got cheaper, what are known as garbage-collected languages (which do memory management for you, making programming simpler and safer, but less efficient) became more popular. The Rust programming language tries to have its cake and eat it too, supposedly allowing programmers to write safe but efficient low-level code, but as Linus Torvalds pointed out in one of his famous rants, this is to some extent wishful thinking. An AI-relevant wrinkle in this story is that memory never did get cheaper close to the processor. In fact it has gotten much more expensive in relative terms, which is why low-level programming, which has to deal with limits on high-bandwidth memory close to the processor, still has an old-fashioned flavor to it.

Like missile-guidance computing, kernel computing (the lowest level) is fundamentally too close to the world of atoms to accommodate wishful and expensive illusions of safety (or things that masquerade as safety, such as nearly unlimited memory). Kernel computing, missile guidance, and construction explosives are fundamentally dangerous things, and there’s only so much safety you can or should attempt to design in.

Why? Because the world is a dull, dirty, and dangerous place, and things that prevent you from being alive to that fact are not actually good for you, no matter how nice they feel. When your tools don’t embody sufficient courage to deal with the world as it exists, your courage eventually atrophies too.

A Dull, Dirty, Dangerous World

It is increasingly sinking in for me that what I’m calling mediocre computing is not just a coherent and viable philosophy of computing, it is in fact the dominant philosophy of computing as it actually exists and has been successfully practiced since the beginning. A philosophy designed for a “dull, dirty, and dangerous” world, as 2000s military doctrines put it.

And this philosophy is a fundamentally courageous one that continues to be the dominant and successful one at the frontiers of computing today.

Courageous does not mean foolhardy. Mediocre computing is aware of and responsive to risks, and predisposed to build in mechanisms to accommodate them. Conservative where necessary, liberal where possible, and with a deliberate and conscious approach to risk management, including risk of death and explosions.

A subtle effect of these predispositions is that mediocre computing is also often very boring. It does a lot of housekeeping and chores. It performs a lot of routine maintenance, checking, testing, and so on. This is because if you’re courageous, you want to consciously take on risk where it actually matters and there’s an upside to it. You don’t want sloppiness to move risk around in ways that make the courage futile. A missile that blows up on the launch pad is useless, so you over-engineer the boring stuff to make sure it doesn’t.

It is no accident that you find the best examples of courageous computing in the “dull, dirty, and dangerous” world of military technology, where you have to do a lot of boring chores, take on a lot of risk, and deal with the banal messiness of real life. Long stretches of boredom punctuated by moments of panic and/or excitement.

Mediocre computing solves for courage in this sense of facing up to the dull, dirty, and dangerous world, while accepting the possibility of failure scenarios and real costs. I would argue that if you took an inventory of all the code out there in the world, of all sorts, deployed successfully into production, you’d find that 90% embodies this philosophy. You’ll find that most of the discarded and forgotten or never-written-vaporware code fits more wishful philosophies of computing.

Which brings me to two currently popular wishful philosophies of computing that I think are both wrong and doomed to irrelevance.

Wishful Computing

Right now, all the discourse, not just around AI but also around the other frontier genres of computing I’ve been talking about — crypto, metaverse, robotics etc. — is dominated by two philosophies that account for very little of the technology itself, but almost all the overwrought commentary.

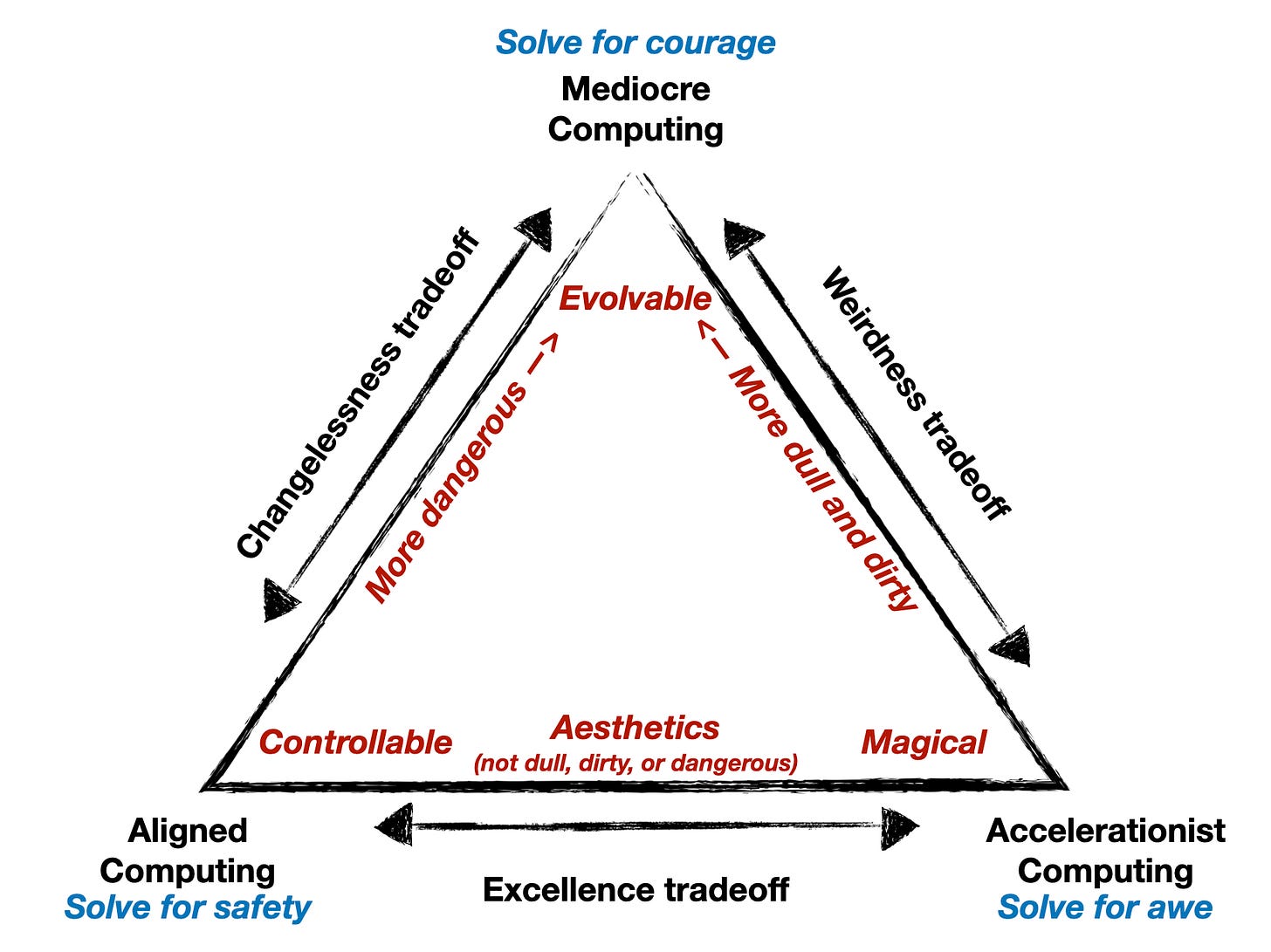

These are what I think of as aligned computing and accelerationist computing. Here is a triangle diagram representing the three philosophies. The two at the bottom are the loud and discourse-y wishful computing philosophies.

They are wishful in different ways.

Aligned computing wants the world to be safer for an unchanging human condition than it is or can be (or in my opinion, should be), doing away with all the danger. It is basically Luddism rebottled.

Accelerationist computing wants the world to be more exciting and dramatic than it is or can be (or in my opinion, should be), doing away with all the dull and dirty parts that slow things down. It is a kind of intoxicated neo-paganism.

The broad insight underlying mediocre computing is that while the world can be made safer and more interesting, it cannot be made arbitrarily safer and endlessly interesting, so you have to proceed with some mix of reserves, caution, and acceptance of dullness, dirtiness, and danger. This is why I go on like a broken record about things like embodiment, situatedness, and friction.

One engineering symptom of this is that the tools get progressively less “safe” and “fun” as you get closer to the world of atoms, because the designers of courageous systems are not willing to sacrifice other desirable attributes such as performance, expressivity, and evolvability for safety or interestingness.

Assembly is more dangerous and less fun than C. C is more dangerous and less fun than Rust. Rust is more dangerous and less fun than Ruby (disclosure: I’ve never tried Rust, but have tried the other three to limited levels). As you approach the silicon, the world gets less safe and fun. You have to deal with more of the dull, dirty, and dangerous aspects. The good news, of course, is that anything you do manage to do successfully at the lower levels provides you unreasonable leverage at higher levels for things that are safe and fun. C may not be either safe or fun, but video games written on stacks that rest on C can be both.

Other engineering domains approach subtler kinds of safety boundaries. While Solidity (the language used for programming Ethereum) is a high-level language that is used for highly abstract things like smart contracts, it is very close to the “bare metal” of finance. Atomic operations are unsafe in the sense that they are irreversible because blockchains are what they are, and deal with real money that can cause life-changing consequences for the human owners of that money. But once you’ve written good blockchain code that deals with the dull, dirty, and dangerous aspects of blockchain programming, you can have fun with NFT-based on-chain gaming at the top.

The closer you get to the atoms that constitute it, the less the world will respect any sort of wishful thinking that aims to solve for naively human-centric outcomes like safety or fun.

The wishfulness is obvious with aligned computing, which aims to create controllable forms of computing that don’t “escape” to evolve in wild ways, but is curiously also true of accelerationist computing, which fetishizes an exciting and magical kind of transhumanist co-evolution with god-like computers.

It is perhaps an obvious point, but it’s worth saying it explicitly: transhumanism is just as anthropocentric as humanism. When technologies are allowed to evolve by their own logic, not only do nostalgic desires for a changeless and eternal human condition get frustrated, so do manic desires for endless exciting change. The locus of evolutionary logic shifts. Humans are no longer the center of the evolutionary process. Things can get dull and dirty at human loci. Just because you don’t get the changeless stability the alignment types want, doesn’t mean you’ll get the endless excitement the accelerationists want.

In the triangle diagram, I have tried to represent this via the two trade-off boundaries that converge on evolvability. Mediocre computing ultimately solves for the evolvability. The trial-and-error driven by courageous computing, with all its tediousness and failures, ensures that the infinite game of technology can go on. That more capable systems will keep arising out of the ashes of less capable ones.

If you fetishize humanistic alignment, you will have to give up some changeless safety in a static human condition.

If you fetishize exciting transhumanist journeys, you’ll have to give up some magic focused on feeding your appetite for interestingness, and accept a certain amount of dullness and dirtiness.

Again, both aligned computing and accelerationist computing are theoretical philosophies. Not much actual code is out there embodying either of those philosophies, and even less code doing so with any degree of adaptive, evolutionary success. The two philosophies argue about human-centric notions of computing excellence with each other, but don’t actually do a whole lot of computing. They are totalizing aesthetics of computer use more than they are philosophies, as I have tried to illustrate on the bottom edge of the triangle, and rarely seem to rise above the level of art projects or design fictions.

Now that we’ve got that set up, what does courage in computing look like at the frontiers today? Let’s start with machine learning, which is once again predictably dominating the discourse.

So what is courage in machine learning?
