Texts as Toys

Writing is now toy-making, reading is now playing with toys

In 2009, a couple of years into my blogging career, as I began finding my feet, I wrote a post that was very popular at the time, The Rhetoric of the Hyperlink. I think I’m finally finding my feet with LLMs, a dozen sloptraptions in, and have developed a comparable mental model for reading and writing with AI.

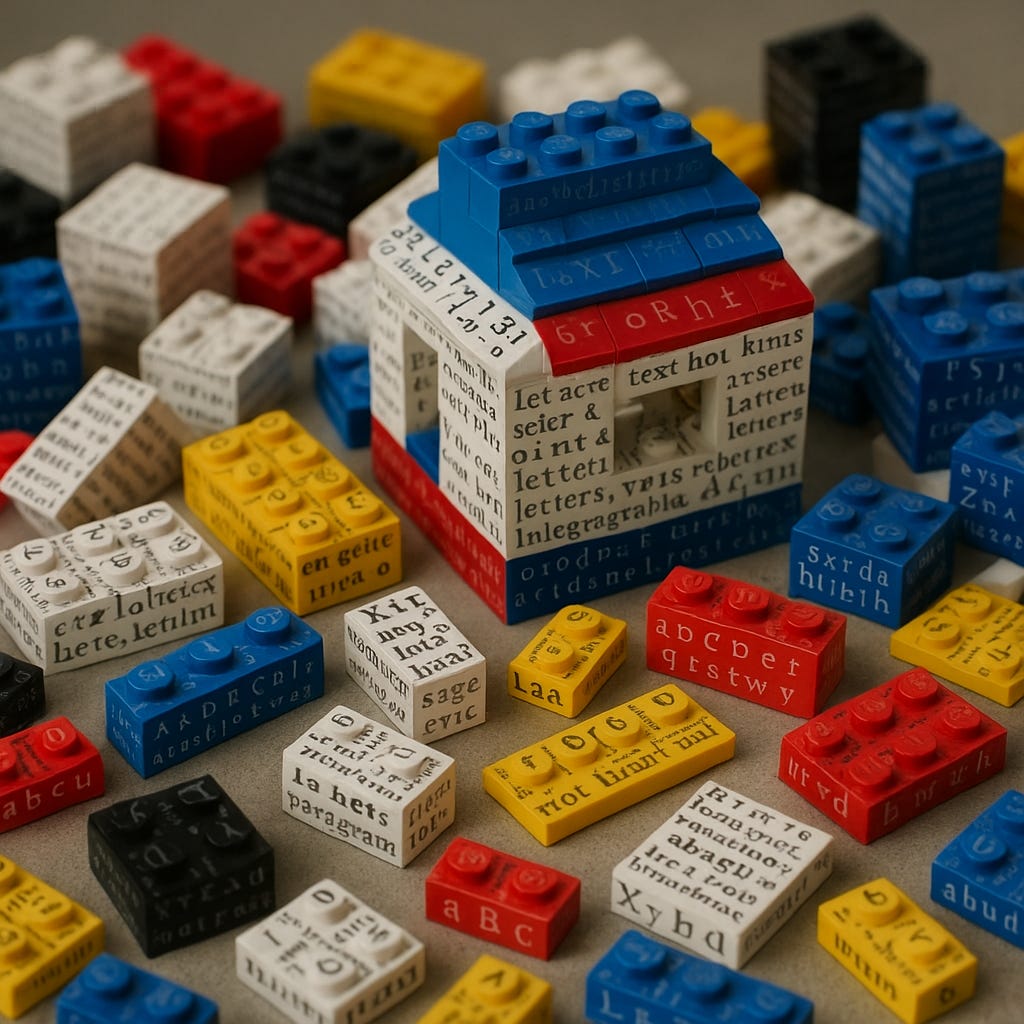

The essential mental model is that of texts as toys, and LLMs as technologies that help you make and play with text-toys.

Writing is now toy-making, and reading is playing with toys. The textuality of the AI era is to pre-AI textualities as video-games are to screen media.

The big thing to understand about pre-AI text culture is that reading and writing are both relaxing, pleasurable activities for those who do a lot of either or both. They are ludic immersion activities.

The ludic quality of engaging with texts is one thing I am now highly confident will not change. The major strength of the texts as toys mental model is precisely that it not only preserves this ludic quality, but actually strengthens and centers it.

By contrast, many mental models of AI treat it as a purely instrumental, functional technology, with no particular affective disposition. No particular mood, let alone a subtle one like playfulness.

AI as Ludic Technology

Even a few hours of playing (that’s the operative verb) with AI reveals something that fearful critiques from a distance seem to miss. The primary mood of the medium is playfulness. The message of the medium, at its current stage of evolution at least, is let’s play.

AI is a ludic technology.

I haven’t had this much fun with a new technology since I discovered Legos as a kid. Why, then, do so many people seem entirely oblivious to the ludic aspect of AI?

I think there are three reasons: threat to humanness, inexperience with command, and overly realistic expectations.

Threat to Humanness

AI sufficiently threatens notions of humanness that playfulness vanishes from the human-AI interaction not because it is missing in the technology, but because it is being deliberately or unconsciously resisted by the human.

Human users of AI seem to approach it with an unusual seriousness. It is hard to play with a technology when you approach it with the idea that you are dealing with a world-destroying monster that’s out to devour your humanity. It is more natural to approach it in a metaphorical hazmat suit, in a high state of stress and wariness, armed with suitable weapons.

And because AIs, by their very nature, mirror the attitudes of the humans shaping their contexts, the playfulness gets repressed in the responses as well.

This reminds me of how I reacted to large dogs as a kid. I was scared enough of them that I never got comfortable playing with them. I’ve gotten over it as an adult, but still prefer cats.

Inexperience with Command

The second reason is subtler. The ludic quality gets missed because people inexperienced with command have no idea how to approach it as a collaborative dialogue that can be fun, rather than a contest of wills that must feel like Serious Adult Dominance (SAD) when done right.

Much of what AIs do is hidden from view, and not part of the human-interaction layers. It is also activity at a scale most humans are not used to supervising. Even if it responds with an apparently individual voice, an AI at the other end of a chat is orchestrating a set of resources that is closer to a team of humans. So it is perhaps not surprising that evidence is emerging for a rather curious phenomenon: Somewhat older people are, uncharacteristically, taking to AI better than younger people.

People inexperienced with command seem to imagine it as a distant imperative stance of open-loop supervisory direction-giving that brooks no dissent (even though it never worked well on them). They seem to have oddly terrifying, autocratic ideas about it. One gets the impression that they approach AI with the imperious air of Pharaoh Rameses in The Ten Commandments: “So let it be written; so let it be done.”

Supervision of any agent, even a literal slave, does not work like that. AIs are no exception. It’s hard to coax playfulness out of even the most playful entity if you’re trying to dominate it like a Pharaoh.


Overly Realistic Expectations

Toys, like any other medium for modeling reality, simplify some aspects, exaggerate some, hallucinate some, and introduce affordances that are a result of toys being toys.

Toy cars are vastly simpler than real cars, but tend to come in a wildly exaggerated range of ridiculous shapes and colors. They feature hallucinated elements that don’t exist on real cars, such as googly eyes. They might feature wind-up mechanisms that don’t exist on real cars, but serve as affordances for play.

Serious adult humans tend to be sensitive to misregistrations between reality and models when it comes to serious modeling media. We realize mathematical equations don’t capture all the phenomenology they aim to model. We understand that maps are not territories. We even understand that the abstractions of language in pre-AI textualities constitute an expansive commons model of reality. Words don’t exactly capture the realities they point to. We understand the meaning-pointing distinction. We talk of fingers pointing at moons.

Playful humans, from infancy on, also tend to be sensitive to such misregistrations, but via a different ludic sensitivity. Even a three-year-old understands that the toy car is not the real car, and that a certain amount of imaginative make-believe is necessary to engage with it. You act as if it is a real car, for fun.

Somehow, even though AI is also a modeling technology, we don’t observe our usual epistemic hygiene practices. We act shocked, shocked, that an AI can solve genius-grade math problems but make trivial common-sense errors elsewhere.

But we are not shocked when we land in a new country and find that it is not in fact entirely pink and one inch across the way it is on the paper map.

We are not shocked to discover that, contra physicists’ mathematical models, cows are not in fact spherical and do not live in a vacuum.

Our expectations of AI end up mismatched with reality not because it is particularly poor as a modeling medium, but because the misregistrations arise from its toy-like nature. AI models are “wrong” about reality in the same ways toys are “wrong” about reality.

A model of a real rocket, for example, might be highly realistic in some ways. With the right kind of trick photography, you might even be fooled into thinking it’s the real thing. But then if you examine other aspects, you’ll find weird “mistakes.” It is made of plastic, not metal! The human capsule is way too small to hold humans!

To have the right expectations of AI outputs, we must approach it not just as a modeling medium, but as a toy-like modeling medium. The misregistrations are going to be the misregistrations of toys. Not those of serious adult modeling technologies like maps and mathematics.

Instrumental Reductiveness

Exacerbating these three factors in the case of text in particular (we’re better able to treat image generation as a “toy” technology) is that most humans have — and there is no kind way to say this — a fucked-up relationship with text.

This is why LLMs in particular are, I think, deeply misunderstood by many people who had weak or non-existent relationships with text before AI.

Because LLMs allow those who find no joy in the ludic affordances of text to still develop radically superior instrumental relationships with text, they (and the critics watching them) make the critical error of thinking that the ludic quality can be dispensed with entirely. They use LLMs in ways that suggest an intent to reduce text to some sort of pure industrial intermediate that will eventually sink out of sight beneath more sensorial modes of information processing.

I am now convinced this won’t happen. The ludic qualities of both text, and AI as a technology, are load-bearing at the human interaction level. You must play to unleash their potentialities. While LLMs may well develop their own cryptic latent languages to communicate among themselves, that does not mean text will disappear between humans, or between humans and machines. A highly expressive symbol system with a grammar is a necessary part of both human-human and human-machine relations.

It is important to note that the ludic element plays a major role even in purely functional and instrumental reading and writing. Examples include:

Reading or writing a brief or report to prepare for a meeting

Studying a textbook and making notes for an exam

Reviewing a paper to write a peer review

Composing text strings for user interfaces

All these types of reading and writing can be highly pleasurable and relaxing if the relationship to the text is ludic on both ends (and you’re at the appropriate level of literacy in the particular subject or genre). In fact the relationship must be a ludic one to access the most powerful potentialities.

Much of the aesthetic pain of dealing with “business writing” is the result of the fact that most people producing it are bad at having fun with text. The ability to have fun with text is a kind of athletic ability. If you suck at a sport, it’s hard to have fun with it, to actually play the sport. So to the extent AI empowers those who are bad at having fun with text, textual cultures and technologies can start to lose their playful aspect.

That, then, sets the stage. AI is a ludic technology that must be engaged with in ludic ways. This means counterprogramming the three instinctive attitudes that work against playfulness: perceived threat to humanness, inexperience with command, and overly realistic expectations. And beyond that, we have to account for the fact that AI vastly increases the textual agency of humans who, through some mix of lack of aptitude and poor nurture, never learned to have fun with text.

With that preamble out of the way, let’s talk about making and playing with text toys.

Making Text Toys

There is a lot to be said about the writing, or “toy-making” aspect, and much of it is primarily of interest to other writers. Most people who enjoy playing with Legos do not appreciate the very different pleasures of nerding out over injection molding, the thermal properties of ABS, and industrial dye chemistry. While some aspects of designing Lego kits overlap with playing with Legos, there is an irreducible maker-only side to any toy.

Players, though, should have at least a minimal understanding of the toy-making side in order to cultivate their tastes and capacities for ludic immersion beyond a point. You can’t have the right expectations and relationships with a thing if you don’t quite know what it is or how it was made. Most adult fans of Lego know, for instance, about the very tight tolerances of the bricks, and the rules for legal and illegal builds that emerge from stress/strain limits on the material.

But for the writer/toy-maker, the ludic aspect must extend to the irreducible toy-maker side. Presumably, engineers at Lego do have fun nerding out over injection molding.

In 6 months of experiments with LLMs, I’ve radically improved my ability to have real fun with the toy-making. In fact, it’s the main thing I solve for, rather than quality of output. The technology is evolving at a blistering pace, but I only adopt and experiment with capabilities when I find a ludic angle. This is one reason I haven’t experimented much with system prompts or “professional” tooling. Those feel like chores, comparable to putting toys away neatly.

Authoring with LLMs is a very different kind of fun than without, and the reason I am able to enjoy both is probably that I am also an engineer. It is nothing as crude as ambidexterity across the belligerent wordcel-shape-rotator dichotomy, but there is admittedly some truth to that lens. More on this later.

These differences in the ludic qualities of pre-AI and AI textualities lead me to make a prediction: the people who will enjoy writing with LLMs will not be the same kinds of people who enjoy writing without them, except by accident, as in my case.

You see this with every major technology. Let’s use ship-building as an example, focusing specifically on the ludic aspect of the transition from sail to steam for builders.

New Ludicities of Making

Yeah, I made up a word. I didn’t misspell lucidity.

Ludicity is the ludic quality of an embodied behavior or modality. In this case, text-toy making. You’re allowed to make up words if you’re in the business, just as Lego engineers are allowed to make up new parts.

People who were skilled at the trades and crafts related to making wooden sailing ships tended not to be the ones who got into, and got good at, building steel steamships. One reason was that the ludicities of the two industries were different.

Here’s my potted history of the shift (it is a toy model of how technology transitions transform ludicities, so don’t treat it as rigorous history).

In the overlap era (a few decades in the late 19th century), some shipbuilding craftspeople gave up and retreated along with the sailing-ship industry, as it shrank to a marginal luxury industry, generally focused on much smaller vessels. Others adopted the new modes, but stuck to old ways, never learning to either take pleasure in the new materials, tools and techniques — steel, coal, oil, welding, riveting, boiler-construction — or use them in particularly literate and skilled ways. They wrapped up their careers with a joyless and unrewarding last chapter, complaining about the decline and fall of civilization due to the brutish new technology, and unshakably nostalgic for the Age of Sail.

And a final, sufficiently supple-minded or accidentally prepared group developed a new ludic relationship with ship-building.

The three legacy groups were selected out, adversely selected (due to being grandfathered in), or adaptively suited to the new technological mode and ludicity.

We can (notionally and provisionally) guess that the three groups constituted roughly a third each, and that the breakdown generally correlates with both age and maturity in the older skills (cf: Douglas Adams’ three laws of technology adoption). This means two thirds of the working population of ship-builders cycled out with more or less grace, and a third pivoted (typically early in their careers) to the new way of building ships, and were joined by “steel-steamship-native” shipbuilders born into the new age, who never learned much about the old ways at all, modulo historical curiosity and nostalgia.

What both the pivoters and new-native types shared, though, was a capacity for ludic immersion in the new ways.

Profiling Early Text-Toy Makers

The ship-building analogy suggests a perhaps too-simple first-order prediction about who the writers of the LLM era will be.

A third of pre-LLM writers will simply retreat to whatever shrunken marginal scene remains around exclusively hand-crafted writing, comparable perhaps to calligraphers after the introduction of print, or typesetters after the invention of desktop publishing. The specific elements of the craft they happen to be attached to may survive the transition, but the importance of those elements will not. Note that while all current writers will continue to also write without AIs (as I am doing with this essay), this group consists of people who refuse to use LLMs at all, who reject the new ludicity. If you love walking too much, you might refuse to learn to drive.

Another third will try valiantly to “adopt” AI-assisted writing, but will suck at it, and never quite acquire the toy-maker mindset. They will be too stuck in pre-AI textualities, and never develop fluency with AI-era textualities. Presumably not every skilled calligrapher in the 1450s who tried to transition got equally good at type design.

And the last third will pivot and learn to enjoy toy-making, and do increasing amounts of it. These are the ones we are interested in. I count myself in this set.

Kids under five today will never learn the old way of relating to text production at all. Their relationship to text making, whether ludic or joyless, will be mediated by AI. Even if they anachronistically join the hand-crafted-only crowd, they will be situated in an AI era. They will never experience what living generations have memories of: a world without AI.

But there are wrinkles to the standard trajectory with AI. The analogy to ship-building does not quite work.

Because AI is very much a management technology, some maturity and experience with command is extremely valuable. So the age correlation might be different. Those who pivot and learn to enjoy toy-making may be middle-aged like me rather than young. Not only do we typically have more experience with supervision, we also appreciate the ability to delegate tasks we are no longer as skilled at as we used to be. Where we’ve plateaued and perhaps even begun to decline. Younger people typically experience some anxiety around delegating tasks calling for skills they are still developing at an ego-validating rate.

With writing, I’ll freely admit that though I’ve acquired a lot of experience, and am still growing in some ways, there are definitely many aspects of craft where I’ve simply lost my edge due to aging or not caring enough, and many areas where I’ve plateaued.

My verbal acuity has weakened. My vocabulary is starting to shrink and simplify. My metaphors aren’t born as naturally sharp. My memory capacity for a vast store of random information, very useful for pre-AI writing, has deteriorated. My wife complains I’m no longer a walking Wikipedia like I used to be.

So I appreciate the ability to step back and let AIs do all the things I used to be better at, in ways younger writers may not.

The AI Authoring Experience

What is text-toy making like? What exactly is pleasurable about it? I’m only just beginning to glimpse the answer: It feels like the pleasure of engineering.

Specifically, the engineering of toys, i.e., toy-making.

As a happy accident, this is the main kind of engineering I have direct experience with. Most “building” in academic engineering research as well as industrial research is toy-making. We might give it a higher-gravitas name (prototyping, proof-of-concept, test build, design study, mock-up, wireframes, pseudocode), but it is all toy-making, marked by a freedom from “production” concerns required to “deploy” engineered artifacts into real-world use. We even use words like “sandbox” to gesture at the toy-like aspect.

When things I’ve built have been deployed to production, it’s been other people who hardened them enough to survive: people able to tap into the ludic possibilities of the world beyond engineering sandboxes. Today, I don’t even do production-intent engineering. It’s all hobby grade. I get introspective value out of it as a technology consultant and writer, but the actual things I build (such as rovers) are unlikely to get deployed to production.

An important difference between engineering toys and text toys though, is that the iteration loops are much tighter than in traditional or even software engineering toy-making. It is close to the rewrite/edit loop in traditional writing.

Building mechanical and electrical engineering toys, using 3D printers and breadboards, can take hours to weeks per iteration. Building software toys in MATLAB (a particularly toy-like sandboxed research programming environment) can still involve iteration loops on the order of minutes to hours.

When making text toys with LLMs, the iteration loop is on the order of seconds to minutes, which is about the same as the fastest editing/reworking loop you experience in manual writing.

Non-writers often have the idea that we write complete “drafts” and then “rewrite” them. No. That might have been true of the typewriter or longhand eras, but rewriting and editing are fractally continuous down to the word level when you write on a computer.

We still think in drafts because older technologies could not support us below a certain level of resolution (as you’ll know if you’ve ever printed out and marked up a numbered draft with a red pen, something I haven’t done in 15 years).

Finding Ludic Flow With LLMs

One reason I never became a “production” engineer building “real” things is that as things get more real, rework loops get longer, high-frequency dopamine-hit activities get less common, and you have to both cultivate patience for bigger payoffs and develop more complex skills to deliver high-frequency dopamine hits in production regimes. For example, if you’re good enough at soldering, you can find a ludic flow to it. I briefly had this when I worked in a lab in the late 90s. I no longer do. Soldering is disfluent and painful for me now, like one-finger typing. And most of the payoff requires turning on the finished circuit.

Text, though, is the rare engineering material where, no matter how “serious” and “production-grade” things get, finding high-frequency dopamine loops in the process remains relatively easy. This holds even when you’re working on larger-scale works like books, with slow ludic payoff schedules, or dry business writing with naturally low levels of fun potential at the level of words and sentences.

In other words, viewed as an engineering medium, whether you’re making toys or production-grade things, it is naturally easier to find ludic flow with text, in the sense of Mihaly Csikszentmihalyi, than it is with 3D printers, soldering irons, or IDEs.

Truth be told, this is the reason I switched from engineering to writing. I like that it’s easier to find flow with fewer specialized skills, regardless of how “serious” the task. It is always possible to play with text. It’s like food that way.

Of course, since I’ve authored several million words (not counting functional communication like emails or texts), possibly I’m just more practiced at finding ludic flow at any level of seriousness and money-making consequentiality. Many people who want to write complain that they cannot get started, or find it excruciating. They seem to struggle to find flow. Especially as it gets more serious, and there’s money in the picture.

But other things being equal, I strongly suspect it’s much easier to find flow with text than with say electronics or mechanical fabrication. The tools are simpler, the loops are tighter, the worlds are more hermetically sealed off from reality.

Supervisory Ludicity

There’s a possible objection here. Maybe you cannot be good at prompting good text out of an LLM if you haven’t written a million or two words on your own first?

It’s a tempting (and to some, reassuring) idea, but one that I am pretty sure is wrong. I bet, though, that some parents and educationists will demand that LLM use be strongly restricted until far too late. I trust the kids will get around such silliness, as they always do.

The thing is, acquiring supervisory ludicity in relation to a medium is a much quicker process than learning to work hands-on with it. Just because people with such skills and tastes today tend to be older (an artifact of needing to be a certain age before other humans will let you boss them around) doesn’t mean they have to be.

I’m not much of a programmer, for example, but I’ve managed very good hardcore programmers, and done it reasonably well, I like to think. At least they didn’t try to murder me, and mostly did what I asked, and mostly agreed with my reasoning for asking. The key to managing programmers well, for me, was that I knew just enough about programming not to make an ass of myself, and actually enjoyed talking about code, software architecture, etc. with working programmers.

My ludic engagement with code is not hands-on, but it is ludic nevertheless, and more than spectatorial. I may not be a software producer, but I’m more than a pure consumer who can only see and complain about UI elements. I can hold up my end of a reasonably literate conversation with real programmers that might even be useful to them. I can claim a supervisory ludicity in relation to programming. I can not only tell programmers what to do and have them listen without murdering me, I can genuinely enjoy it.

It only took me a few years to acquire a useful level of supervisory ludicity around programming. I didn’t have to personally write a million lines of code to get there.

Young people learning to “write” with AIs will get there just as fast.

Reading While Writing

Reading works in progress, from highly fragmentary notes to near-finished drafts, is a part of any mode of text production, whether manual, or LLM-assisted. Ludic immersion requires that you actually enjoy doing this. It’s not something you grit your teeth and get through.

This is not like chatting. Using an LLM as a chat partner is easy to make pleasurable and playful if you’re not entirely devoid of imagination. Using LLMs to produce text toys for others to play with is another matter.

To the extent text-toy-making inherits the fundamentally supervisory nature of working with AI, you have to enjoy reading the way an editor enjoys reading the drafts of other writers at various skill levels (especially higher than their own), and at various stages of completeness. Except you have to do that in a texts as toys mode.

If you think poorly produced AI writing can be a pain to read, you can imagine how much more of a pain it can be while the text toy is still under construction, unless you develop enough supervisory ludicity.

It takes some time to learn to derive a craft-like pleasure in observing the mechanics of a complex text toy coming together in a chat session. It’s not the same pleasure as you might find in typing the words yourself or reading.

The two are not mutually exclusive, but are definitely different. Reading-while-prompting is different from just reading-while-writing or just reading.

Playing With Text Toys

By reading with LLMs, I mean either loading a specific text into an LLM and accessing it indirectly (interrogative play), or using an LLM on a parallel track to support direct reading (perspectival play), with the relative loading of each mode being a function of your expertise and/or enjoyment level in the subject.

Within the texts as toys mental model, either mode of reading with LLMs must be fun. I’m beating this point to death for a reason. AI is a ludic technology. If you’re not having fun with it, playing with it, you’re doing it wrong.

As readers, just as with pre-LLM texts, pleasure comes first. Function and instrumental value come later, if at all. And this is as true of LLM-assisted business memos as LLM-assisted short stories.

Slop can be defined as texts that make ludic immersion hard or impossible to sustain, either because the writer didn’t intend to support it, or tried and failed. It has nothing in particular to do with AI. Slop has existed as long as writing itself has.

In a mature technological era, such as pre-LLM novels or screenplays, slop is almost entirely the writer’s fault. In an emerging technological era, it is often equally the fault of the reader, for not trying to learn to play in new ways. If you expect to enjoy video games with Lego-play skills (even a close analog like Minecraft), you’re going to have a bad time and blame the toymakers.

Let’s unpack the play-skills required to engage with texts as toys.

The first skill is a version of the “make-believe” aspect of playing with toys — or to use a big word, recoding.

Recoding While Reading

There is always some recoding going on when you read, even if you’re not aware of it. In AI-speak, you’re embedding the work in your own context, and inevitably applying some perceptual filters in the process. When these filters constitute a conscious stance that characterizes you as a reader, you’re doing non-trivial, and perhaps skillful kinds of recoding. You’re reading as X.

By adversarially recoding a work within the reading experience, you can often manufacture ludic immersion despite lack of support from the original text.

This is the mode of camp, for example (cf. Susan Sontag’s “failed seriousness”), or more recently, hate-reading. Or debunk-reading. Or bullshit-detection reading. Or fraud-detection reading. Or plagiarism-detection reading.

A lot of people are having this kind of fun with LLM-generated texts today. There is a certain sort of low-grade pleasure to be had in reading slop in order to take note of (and post about) all the hallmarks of inexpert toy-making, such as sycophancy, overused phrasal templates (“It’s not about X, it’s about Y”), and so forth, that take some skill and experience to get past.

This kind of adversarial recoding pleasure is fine, and is perhaps an entry drug into finer modes of appreciation, but is not what I’m interested in. At least not right now. I might get interested in it when the technology and craft improve, but right now it feels silly, like finding pleasure in the clumsiness of a child who trips and starts to cry.

I’m interested in the literate art of deriving pleasure from an AI-assisted work that the centaur-author intended to put in, or at least wouldn’t object to, or even be flattered by (some non-adversarial kinds of recoding achieve this). Friendly recodings.

A good test of this is whether your recoding (even when adversarial) engages with the authorial intent rather than the AI’s current limitations.

For example, if you read my Signal Under Innsmouth short story, I want you to get something like the pleasure of reading Lovecraft out of it. If you only enjoyed it as camp, or as a pile of LLM tropes, I failed. I myself got a particular toy-making pleasure out of that — learning style-transfer/mood transposition techniques.

Similarly, I want you to enjoy The Poverty of Abundance primarily as a polemic against the Abundance movement (or hate it for attacking the movement because you support it), not in some anti-AI recoded way. I myself got a different toy-making pleasure out of it — learning to prime and unleash an authorial voice that is not only not mine, but explicitly written at me.

So we are talking about the literacy required to experience the intended ludic immersion potential in a piece, via recodings that harmonize. We are not talking about adversarially recoded meta-reading focused on the AI’s limitations.

Interrogative vs. Perspectival Play

Of the two basic play modes I defined, interrogative and perspectival, the latter is both easier to do and better supported technologically.

This is because it is easier to get your dose of ludic-immersive pleasure from the text directly, and use the LLM for supporting sorts of pleasure that may be uneven, and harmonize well or poorly with the main reading experience.

Perspectival play is an extension of the kind of pleasure you get from using Google or Wikipedia to go down bunny trails suggested by the main text. But with an LLM, you can also explore hypotheses, ask for a “take” from a particular angle or level of resolution, and so on. The LLM becomes a flexible sort of camera, able to “photograph” the context of the text in varying ways, with various sorts of zooming and panning.

Google’s integrated LLM summaries at the top of search results, by the way, are getting surprisingly good. I like them now, and I definitely didn’t early on. Sure, they still entirely mis-infer the intentions of queries sometimes, but those are easy to get past if you treat them as “toy search results.”

Perspectival play also really levels up what was a low-pleasure remedial literacy activity in the past: looking up words in the dictionary. I never enjoyed that, preferring mostly to guess meanings from context and move on. But with LLMs, diving into etymological nuances becomes a whole new class of pleasurable side quests.

Interrogative play works better with technical materials right now, for two reasons. First, the texts are typically less engineered for pleasure to begin with. Academic papers are rarely fun to read (the big paper behind all this technology, Attention is All You Need, is horribly painful to read, despite its alluring headline). But LLM-assisted reading can produce a layer of fun as a friendly recoding.

Second, interrogative play with LLMs lets you access ideas beyond your technical level, with ELI5 prompts, requests for illustrative examples and metaphors, etc. These can all be pleasurable to read. Similarly, while summarization is usually a very functional process, when done with some stylistic flourishes (“summarize this for me in Goodfellas gangster talk”), it can become fun.

When I read technical papers in areas beyond my expertise, or texts that are simply not fun, I rely mostly on the LLM, whether in interrogative or perspectival mode. When I read well-written general audience books, or well-written specialized texts within areas where I have sufficient expertise, I rely mostly on my own reading, but use perspectival play for both utilitarian support and derivative pleasures like exploring fun bunny trails.

Well-written here has a specific meaning: the writer has invested significant skilled effort into making the reading pleasurable, and that pleasure is worth trying to access. A nearly necessary but not sufficient condition is that the writer has taken pleasure in the toy-making.

Complex Play Modes

More complex modes of using AIs to read exist, of course, and more will be invented.

A very basic but surprisingly complex one is simply continuing a chat somebody else has started for you.

Kit Mode

With friends, often I don’t bother writing anything or producing any kind of output. I just initiate a chat with sharing in mind, and use my (toy-maker) context to prime the chat session with the right series of initial prompts, and then hand it off to others (the toy players) to take where they will.

This mode could be called kit-mode. In toy-engineering terms, it’s a bit like the build-operate-transfer (BOT) pattern in big industrial deals: somebody builds a factory for you, runs it for a while to work out the kinks, then hands it over to you. Or, if you don’t mind the paternalism, it’s like helping a child play with a toy that’s just beyond their ability to play with alone.

This can and should soon become a form of publishing. ChatGPT allows you to share links and make them publicly discoverable, but the UX is not great. I’d like a Substack for chat sessions (something Substack itself could support, though I doubt it will; it seems heavily invested in building sailing ships well into the steamship era). Kit-mode publishing will likely resemble the publishing of games more than the publishing of books.

Articulated Texts

Another mode, which I haven’t worked with at all, is producing textual hyperobjects that require a bit of software to properly articulate, such as an essay with a UI and a bunch of knobs that allow the reader to dynamically reshape the default text within a parameterized space. A simple example would be a one-page app essay with a few styling buttons (how about Hemingway, Shakespeare, Agatha Christie, and Dr. Seuss buttons for a story?).

One play mode I want to learn to toy-make is mechanical manipulation, in the sense of 3D puzzle boxes or assembling LEGO models guided by pictures, telegraphic instructions, and loose parts. Not just kit mode, but construction-kit mode. I don’t yet quite know how to do that purely with text (i.e., without code), but an illustration of what I’m talking about can be found in experimental TV shows like Bandersnatch or Kaleidoscope. These are workable versions of the choose-your-own-adventure branching narrative structure that never quite worked in the paper-book form factor. I think they can only really be done with AI-based reading modes.

Extended Universes and Beyond

The “final boss mode” is writing to create entire extended universes that readers can explore. These currently are painful to create with unassisted text, and generally require entire large communities working collaboratively (such as SCP) to pull off. I think we’ll soon see single-author extended text universes that are as fun to get into as the MCU.

Things are only going to get more complex.

For example, ChatGPT is very good at playing a version of the Glass Bead Game (GBG) from Hermann Hesse’s novel of the same name. I could start a well-crafted GBG session on the topic of sociopathy and hand it off to you to play with.

All these modes, I think, merely scratch the surface of what’s possible with reader experiences, and of what kinds of text toys can be invented and distributed. To a large extent, our imagination today is still limited by our legacy experiences.

AI-assisted reading and writing is an augmented textuality. It is a particularly powerful one that requires radical conceptual metaphors like texts as toys to fully comprehend, but augmented textualities are not themselves a new thing.

They never worked very well before AI, though, and it is worth considering two of them in detail to understand why: speed-reading and summarization.

Speed-Reading

When I was a kid in the 80s, magazines would often feature ads for “speed-reading” correspondence courses (the online courses of their time), promising you blitz-reading skills. I never tried these, since I was fine with my reading pace, but as far as I could tell, it was usually some systematization of common-sense orientation and scanning behaviors, plus fine-tuning of intuitive skipping/jumping/backtracking/note-taking behaviors. It seems to work for some people.

For a while in the aughts and 2010s, people tried to transpose speed-reading to apps, with display-window chunking, word highlighting, etc. I personally think none of it ever really worked, because you can’t decouple semantics from access mechanics that easily. Your reading rate is a function of your prior knowledge, short-term working memory, and comprehension aptitudes.

But speed-reading really can be done with AI help, in many different ways, through the various play modes I discussed.

Summarization

Another augmented textuality is summarization.

Summarization by other people (CliffsNotes, executive summaries) never quite worked outside of artificial incentive contexts like cramming for an exam or an executive trying to fake being well-read at a party, because summarization cannot be decoupled from personal micro-attention priorities.

By micro-attention priorities I mean priorities at the level of “I pick up on the interleaving of sensory and conceptual detail” or “I read books for the anecdotal vignettes,” rather than macro-level instrumental priorities like “give me the bottom line and actionable insights.”

Both levels of summarization require deeper personal knowledge of the target reader to be effective, especially if you want to convey some of the ludic-immersion potential of the text (the primary way today is selected quotations).

Access and Articulation Mechanics

The rather disreputable (and non-ludic) nature of pre-AI speed-reading and summarization textualities can obscure the fact that both are in fact very natural human reading behaviors, not some sort of travesty or sacrilege. You do it intuitively and unconsciously even when you think you’re worshipfully close-reading a text in “deep work” mode.

Once you “get” the logic of a text in the first couple of pages, you automatically start varying your velocity (“speeding up” and “slowing down”) and summarizing for yourself, by building up a kind of document object model in your head.

A simple example is reading a murder mystery. You’re building up a mental model of the characters, clues, and situational logic as you read, and you may speed up to try and get to the next obvious clue, or slow down to savor a well-crafted paragraph. This is a fact that good mystery writers exploit by burying clues in parts impatient readers tend to speed through, turning a reader weakness into a textual strength, by cashing out that impatience as greater surprisal in the end.

You can’t enjoy murder mysteries if you don’t speed-read and summarize subconsciously along the way! You’ll spoil it for yourself!

It is in fact close reading that is the highly artificial behavior. It’s like code-stepping for debugging in software development.

Speed-reading and summarization tools and behaviors, ranging from parallel note-taking and mind-mapping, to using summaries by other humans and machines, merely build on a natural, in-your-head process.

Speed reading exploits the affordances of what we might call the access mechanics of a text, comparable to how a magnetic disk is set up for the read/write head to access.

Summarization exploits the affordances of what we might call articulation mechanics of a text — how the parts are set up and move relative to each other.

Sometimes access and articulation mechanics are legible (lots of headings and sub-headings, “characters” move through a “plot”), and other times they are illegible (the writer inserts foreshadowings, callbacks, topic sentences, repetitions, and other devices designed to aid both natural unconscious summarization and mechanically assisted versions).

Design for Playability

Access mechanics and articulation mechanics are two major elements of design for readability.

There are many others of interest to writers, but I’m pointing out these two both to illustrate aspects of the craft that carry over well to the toy metaphor, as design for playability, and to give you a taste of the degree to which apparently sacrilegious and disrespectful ways of engaging with texts are in fact natural, necessary, unavoidable, and anticipated and designed for by good writers.

With or without AI, I want you to read my writing as slowly or as fast as is fun for you. I want you to summarize or not, as works best for you.

AIs combine access and articulation mechanics in a particularly powerful way. They animate texts the way video games and powered toys animate the access and articulation affordances of board games and unpowered toys.

One reason older augmented textualities never quite worked is that they lacked an ability to engineer access and articulation mechanisms that were content-sensitive as well as reader-context sensitive. LLMs have solved that problem.

As a result, design for playability is now a real thing with texts.

The Rhetoric of the Text-Toy

A pre-AI augmented textuality that did work is hypertext. Unlike augmented textualities fundamentally rooted in print culture, like speed-reading and summarization, hypertext could be content-sensitive and reader-context sensitive in ways older technologies could not. But arguably, the hypertext era never reached its full potential, and now it never will.

Looking back at The Rhetoric of the Hyperlink, I am struck by the extent to which the evolution of the web after 2009 did not realize the potential of the rhetorical modes I was rhapsodizing about. The evolution of hypertext stalled well short of its full potential, just a few years later.

After the first burst of innovation around blogs and wikis, pushing the boundaries of hypertext turned into a marginal hobby. Reading and writing hypertext never became a fully developed culture, and by around 2013, hypertext had stalled.

More than LLMs (which only delivered the coup de grâce a decade later), it was a wave of reactionary cultural sentiment that made hypertext almost a stillborn medium. Starting in the mid-2010s, thanks in part to the culture war, we experienced a swing-back towards faux-print linear texts, and a retreat to print-era devices and aesthetics like footnotes. Blogging and wiki-ing stalled, and newsletter platforms like Substack installed a nostalgic print-like textuality that resists hypertext. It even discourages internal linking within a corpus, hijacking it with embeds that reflect the platform’s rhetorical priorities rather than the author’s.

A traditionalist wave of writers turned text technology towards nostalgic priorities, creating beautifully laid out print-like online texts, online “books,” and so forth.

Instead of evolving beyond the stream metaphor, text retreated to the document metaphor, in the form of an entire crop of what I’d call reactionary textualities.

One exception to the wave of reactionary textualities was the brief, arrested rise of notebook and content-garden technologies like Roam and Obsidian. If AI hadn’t been invented, that would have been the future.

But AI was invented. Hypertext is now being displaced for the best possible reason — a superior technology has come around. We do not have to resign ourselves to living in reactionary textualities or pre-AI hypertextualities.

Hypertext was great for its time. It can unbundle and rebundle, atomize and transclude, and link densely or sparsely. On the human side, hypertext is great at torching authorial conceits, medieval attitudes towards authorship and “originality” and “rights,” and property-ownership attitudes towards what has always been a commons.

LLMs are better at all of this than hypertext ever was.

What I called the text renaissance in 2020 is still taking shape. The horizon has just shifted from hypertext to AI. So you just have to look in a different direction to spot it. And approach it ready to play.

I think this (LLMs as playful engineering) will white-pill me the way the Gervais Principle black-pilled me around a decade ago. Cyberspace is healing 😌
